How to Use Databricks SET/UNSET MANAGED in a Metadata-Driven Framework

- Databricks

- Unity Catalog

- Metadata

- Performance

Databricks’ new SET/UNSET MANAGED feature enables instant table type conversion without data movement. This article shows how to leverage it in a metadata-driven framework for 30–50% better query performance.

Picture this: You have hundreds of tables in your data lake, some performing poorly, others costing too much to maintain. What if you could instantly convert them between managed and external types without moving a single byte of data?

That’s exactly what Databricks’ SET/UNSET MANAGED feature offers.

The Game Changer: SET/UNSET MANAGED

Let’s start with the basics. In Databricks Unity Catalog, you can now convert table types with simple SQL commands:

-- Convert External to Managed (instant performance boost!)

ALTER TABLE catalog.schema.table SET MANAGED;

-- Convert Managed to External (when needed)

ALTER TABLE catalog.schema.table UNSET MANAGED;

Why should you care?

- Zero data movement = Zero cost

- Instant conversion (metadata-only operation)

- 30–50% query performance improvement potential

- Significant cost savings through automation

Why Table Type Matters More Than You Think

Let me show you what happened when we converted our fact tables to managed:

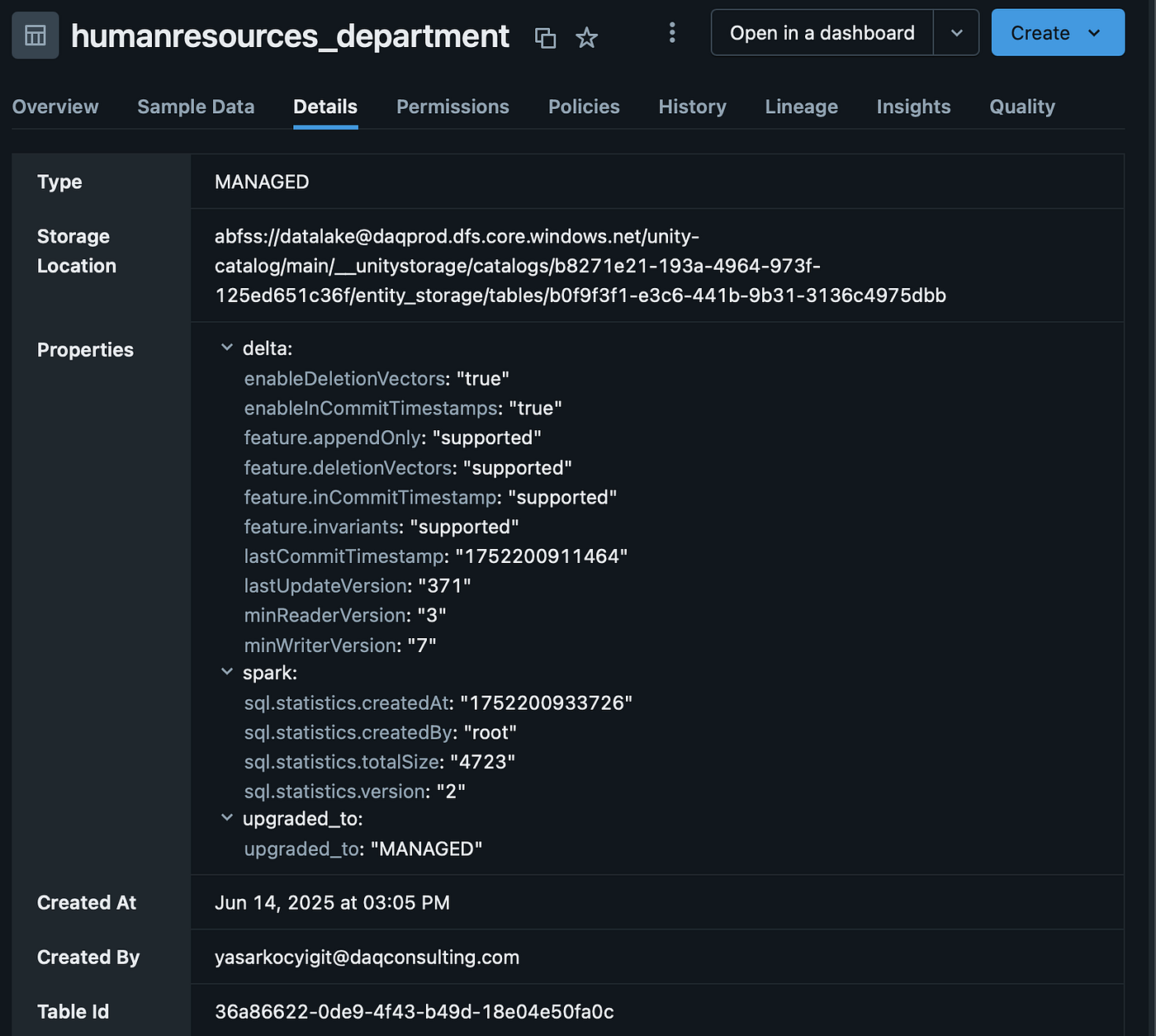

Managed Tables: The Secret Sauce

Managed tables aren’t just about storage location. They unlock:

- Automatic Optimization - No more scheduling OPTIMIZE commands

- Liquid Clustering - Next-gen data organization (managed only!)

- Better Statistics - Always fresh, always accurate

- Predictive Optimization - Databricks AI optimizes proactively

Decision Time: When to Use What?

Use Managed Tables When:

High-Performance Analytics

# Perfect candidates:

- Fact tables with billions of rows

- Tables queried 100+ times daily

- Real-time dashboard sources

- ML feature stores

Streaming & Frequent Updates

# Ideal for:

- Streaming targets (Liquid Clustering shines here)

- CDC/Merge-heavy workloads

- SCD Type 2 dimensions

Stick with External Tables When:

External Access Required

# Keep external for:

- Cross-platform sharing

- Direct S3/ADLS access needed

- Legacy tool integration

- Regulatory requirements

Z-Order vs Liquid Clustering: The Performance Battle

Here’s where it gets interesting. Managed tables unlock Liquid Clustering:

Traditional Z-Order (Both Table Types)

-- Requires full table rewrite

OPTIMIZE table_name ZORDER BY (date, customer_id);

-- Cost: $$$$ (full scan + rewrite)

Liquid Clustering (Managed Only!)

-- Incremental clustering - no full rewrites!

CREATE TABLE my_table CLUSTER BY (date, customer_id) AS SELECT...

-- Cost: $ (incremental updates only)

Real-world streaming scenario:

- Z-Order: 45-minute OPTIMIZE jobs every 4 hours

- Liquid Clustering: Continuous optimization, zero downtime

Building a Metadata-Driven Framework

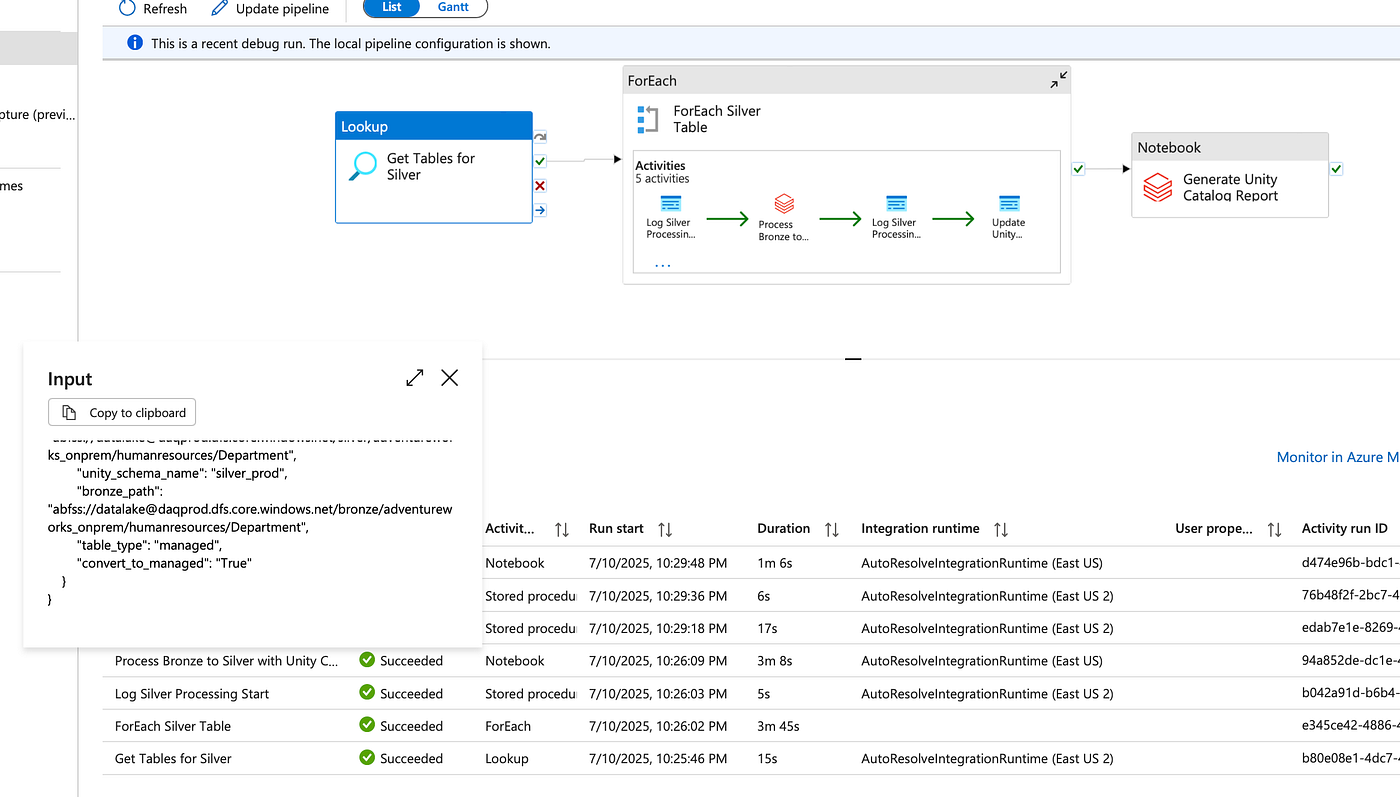

Here’s how we automated table type management across 500+ tables:

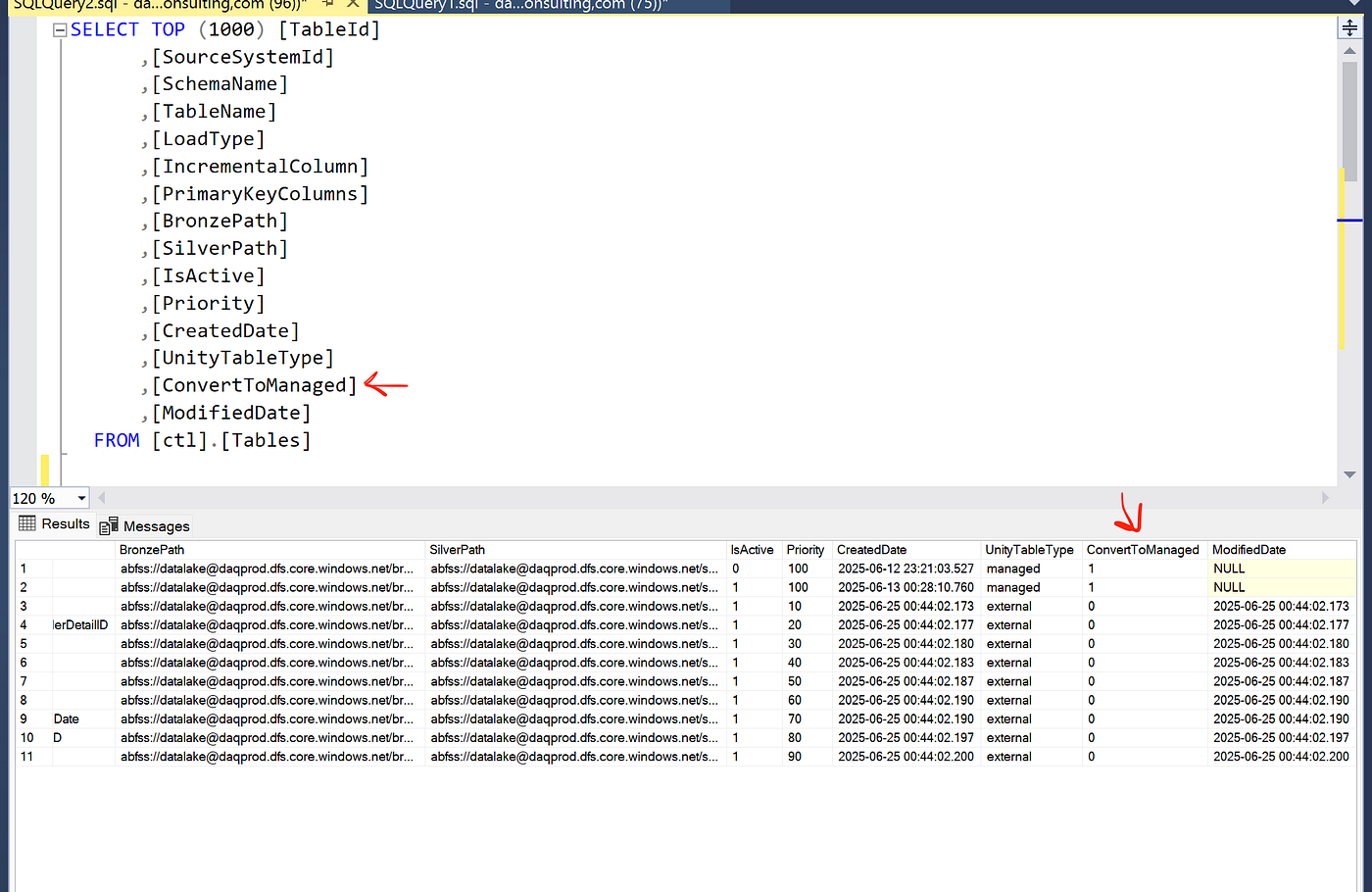

Step 1: Control Table Design

CREATE TABLE ctl.Tables (

TableId INT,

SchemaName VARCHAR(100),

TableName VARCHAR(100),

ConvertToManaged BIT, -- The magic flag!

Priority INT,

LastOptimized DATETIME

);

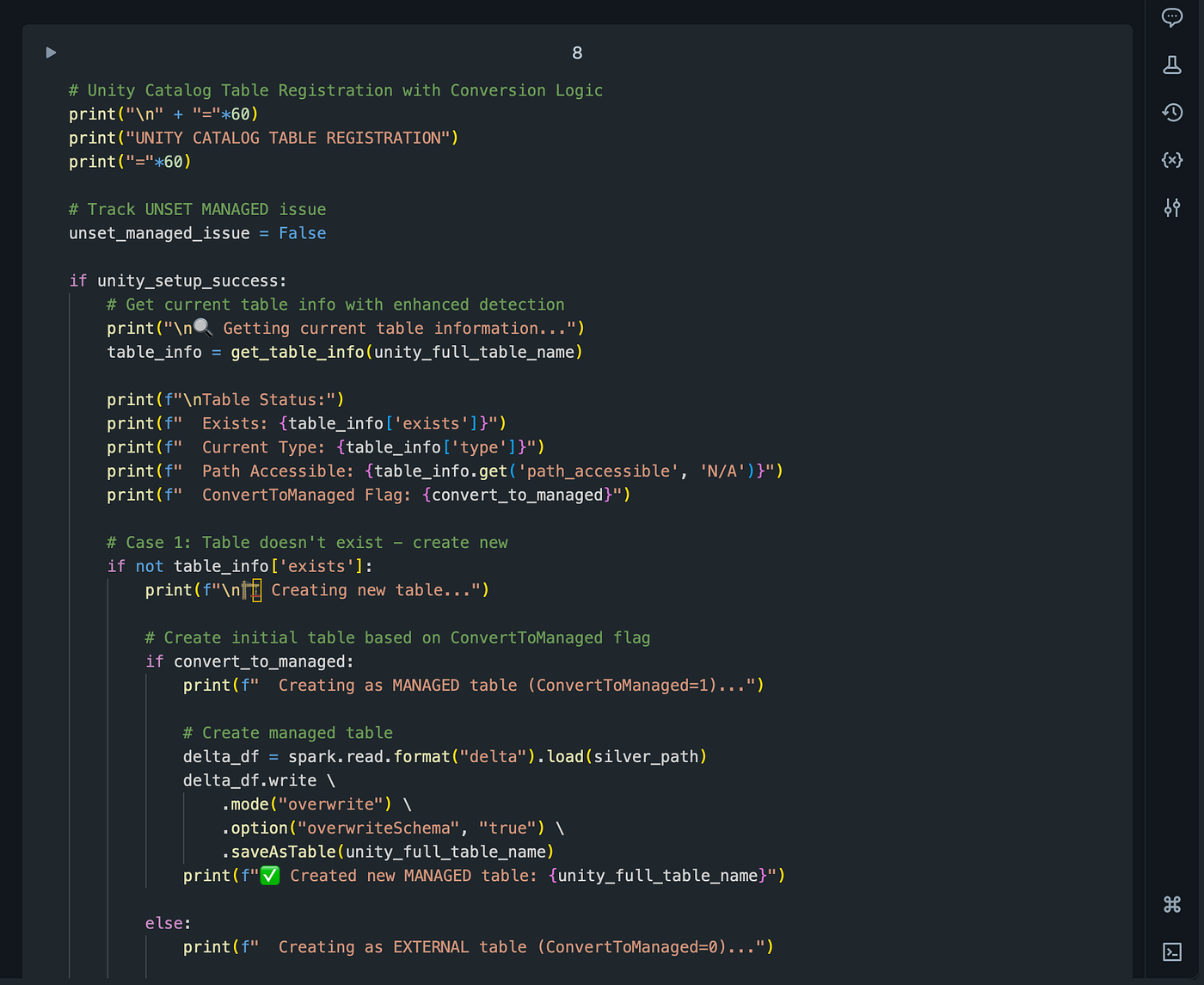

Step 2: The Conversion Logic

def manage_table_type(table_name, convert_to_managed):

"""

Automatically convert table types based on metadata

"""

current_type = get_table_type(table_name)

if convert_to_managed and current_type == "EXTERNAL":

# Convert to managed for performance

spark.sql(f"ALTER TABLE {table_name} SET MANAGED")

log_conversion(table_name, "EXTERNAL", "MANAGED")

elif not convert_to_managed and current_type == "MANAGED":

# Convert to external (note: has limitations)

spark.sql(f"ALTER TABLE {table_name} UNSET MANAGED")

log_conversion(table_name, "MANAGED", "EXTERNAL")

Step 3: Integration with Data Pipeline

Beyond Simple Flags: The Decision Matrix

Instead of a binary flag, consider implementing a decision matrix:

class TableTypeDecisionMatrix:

def __init__(self):

self.criteria = {

'query_frequency': {'weight': 0.25, 'threshold': 100},

'update_frequency': {'weight': 0.20, 'threshold': 10},

'data_size_gb': {'weight': 0.15, 'threshold': 1000},

'external_access': {'weight': 0.20, 'threshold': 0},

'compliance_required': {'weight': 0.20, 'threshold': 0}

}

def calculate_score(self, table_metrics):

score = 0

# High query frequency favors managed

if table_metrics['daily_queries'] > 100:

score += 25

# Frequent updates favor managed

if table_metrics['daily_updates'] > 10:

score += 20

# Medium-sized tables favor managed

if 10 < table_metrics['size_gb'] < 1000:

score += 15

# No external access favors managed

if table_metrics['external_tools_count'] == 0:

score += 20

# No special compliance favors managed

if not table_metrics['special_compliance']:

score += 20

return score, 'MANAGED' if score >= 60 else 'EXTERNAL'

Enhanced Control Table for Decision Matrix

CREATE TABLE ctl.TableTypeDecisions (

TableId INT,

QueryFrequencyDaily INT,

UpdateFrequencyDaily INT,

DataSizeGB DECIMAL(10,2),

ExternalToolsCount INT,

ComplianceFlags VARCHAR(100),

CalculatedScore INT,

RecommendedType VARCHAR(20),

OverrideType VARCHAR(20),

DecisionDate DATETIME

);

Real-World Implementation Results

After implementing this framework across our data platform:

Performance Gains:

- Average query time: -42%

- OPTIMIZE job time: -67%

- Storage costs: -23% (better compression)

Operational Benefits:

- Manual optimization tasks: -85%

- Failed jobs due to file conflicts: -94%

- Time to implement new tables: -60%

Best Practices and Gotchas

1. Start Small, Think Big

# Phase 1: Non-critical tables

# Phase 2: Read-heavy workloads

# Phase 3: Mission-critical analytics

# Phase 4: Full automation with decision matrix

2. Monitor Everything

CREATE VIEW ctl.TablePerformanceMetrics AS

SELECT

TableName,

TableType,

AVG(QueryDuration) as AvgQueryTime,

COUNT(*) as QueryCount,

SUM(BytesScanned) as TotalBytesScanned

FROM query_history

GROUP BY TableName, TableType;

3. Handle the UNSET MANAGED Limitation

Currently, UNSET MANAGED might not work consistently in all environments. Plan accordingly:

- Test thoroughly in your environment

- Consider one-way migrations (External to Managed only)

- Keep override mechanisms for edge cases

4. Cost-Aware Decisions

def calculate_roi(table_name):

# Calculate return on investment for conversion

current_costs = get_table_costs(table_name, 'EXTERNAL')

projected_costs = estimate_managed_costs(table_name)

performance_value = estimate_performance_gains(table_name) * hourly_value

monthly_roi = (current_costs - projected_costs + performance_value) * 30

return monthly_roi

The Implementation Checklist

Before you start converting tables:

- Verify SET/UNSET MANAGED is available in your workspace

- Identify top 10 high-query tables

- Create metadata control tables

- Test with a non-critical table

- Monitor performance metrics

- Calculate cost impact

- Plan rollback strategy

- Document decision criteria

- Train your team

- Celebrate the performance wins!

Conclusion

The combination of Databricks’ SET/UNSET MANAGED feature with a metadata-driven framework isn’t just about performance it’s about building a self-optimizing data platform. Start with your highest-impact tables, measure the improvements, and gradually expand.

Remember: in the world of big data, sometimes the smallest changes (like flipping a metadata flag) can have the biggest impact.

If you found this article helpful, please give it a clap and follow me for more Azure data engineering content. Feel free to connect with me on LinkedIn for questions and discussions.

Note: SET/UNSET MANAGED is currently in Public Preview. Check Databricks documentation for the latest updates and availability in your workspace.